Proxy Power: How to Scrape Proxies Efficiently Without Paying

In the ever-evolving digital landscape, the ability to retrieve and collect information from multiple online sources is crucial for many individuals and businesses. However, with growing restrictions on data access and an increasing number of anti-scraping measures, using proxies has become a key strategy for web scraping. Proxies act as intermediaries that let users conceal their IP addresses, which makes it easier to collect data without running into blocks or CAPTCHAs. For anyone getting into web scraping, learning how to scrape proxies efficiently for free is an invaluable skill.

This guide breaks down the details of proxy scraping, including the tools and techniques needed to find, verify, and use proxies successfully. It covers a range of topics, from fast proxy scrapers to the best free proxy checkers available in 2025. We'll also discuss the key differences between proxy types such as HTTP and SOCKS, and offer tips on measuring proxy speed and anonymity. Whether you are an experienced developer or new to web automation, this article will give you the insights and resources you need to make better use of proxies for data extraction and web scraping.

Understanding Proxies

Proxies act as intermediaries between a user and the internet, forwarding the user's requests and relaying the responses back indirectly. By using a proxy, users can mask their IP addresses, which provides a level of privacy and security while browsing. This is especially useful for users who want to protect their privacy or access content that is blocked in their region.

There are several types of proxies, including HTTP, HTTPS, and SOCKS. HTTP proxies are designed specifically for web traffic, while SOCKS proxies can carry almost any type of traffic, which makes them flexible across many use cases. In addition, SOCKS4 and SOCKS5 differ in features: SOCKS5 adds support for authentication methods and UDP traffic. Understanding these distinctions is crucial for choosing the right proxy type for a given task.

In web scraping and data collection, proxies play a vital role in spreading requests out so that target websites do not block them. Rotating IP addresses reduces the chance of detection and keeps data-gathering tasks running smoothly. Good proxy management tools can make data extraction more efficient and help users obtain high-quality data from the web.
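A minimal sketch of IP rotation in Python, assuming you already have a list of `ip:port` strings: the hypothetical `ProxyRotator` class below hands out proxies in round-robin order and skips any you have marked as banned (for example, after a target site starts blocking one). It only manages the list; making the actual requests is left to whatever HTTP client you use.

```python
import itertools
from typing import Iterable


class ProxyRotator:
    """Hand out proxies in round-robin order, skipping any marked as banned."""

    def __init__(self, proxies: Iterable[str]) -> None:
        self._proxies = list(dict.fromkeys(proxies))  # de-duplicate, keep order
        self._banned: set[str] = set()
        self._cycle = itertools.cycle(self._proxies)

    def ban(self, proxy: str) -> None:
        """Stop handing out a proxy, e.g. after the target site blocked it."""
        self._banned.add(proxy)

    def get(self) -> str:
        """Return the next usable proxy; raise if every proxy is banned."""
        for _ in range(len(self._proxies)):
            proxy = next(self._cycle)
            if proxy not in self._banned:
                return proxy
        raise RuntimeError("no usable proxies left")
```

Each call to `get()` moves to the next address, so consecutive requests leave from different IPs; banning a proxy removes it from rotation without rebuilding the pool.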

Proxy Scraping Methods

When scraping proxies, combining different techniques can significantly improve results. One effective approach is web scraping itself: a dedicated proxy scraper collects proxy addresses from websites that publish free proxies. These tools can be configured to target specific proxy types, such as HTTP or SOCKS, so that users retrieve the proxies best suited to their needs. It is worth automating this task, since automated tools can regularly pull fresh proxy lists and save the time spent on manual collection.
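As a rough sketch of what a proxy scraper does once it has downloaded a page, the function below pulls `ip:port` pairs out of raw HTML or plain text with a regular expression and discards malformed entries. It assumes the source prints IPv4 proxies in `ip:port` form; many sites put the IP and port in separate table cells, which would need an HTML parser instead. `extract_proxies` is a hypothetical name.

```python
import ipaddress
import re

# Matches "IPv4:port" pairs anywhere in a page or plain-text list.
PROXY_PATTERN = re.compile(r"\b(\d{1,3}(?:\.\d{1,3}){3}):(\d{1,5})\b")


def extract_proxies(text: str) -> list[str]:
    """Return unique, well-formed ip:port strings in order of first appearance."""
    found: dict[str, None] = {}
    for ip, port in PROXY_PATTERN.findall(text):
        try:
            ipaddress.IPv4Address(ip)  # rejects impossible octets such as 999
        except ipaddress.AddressValueError:
            continue
        if 0 < int(port) <= 65535:  # valid TCP port range
            found[f"{ip}:{port}"] = None
    return list(found)
```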

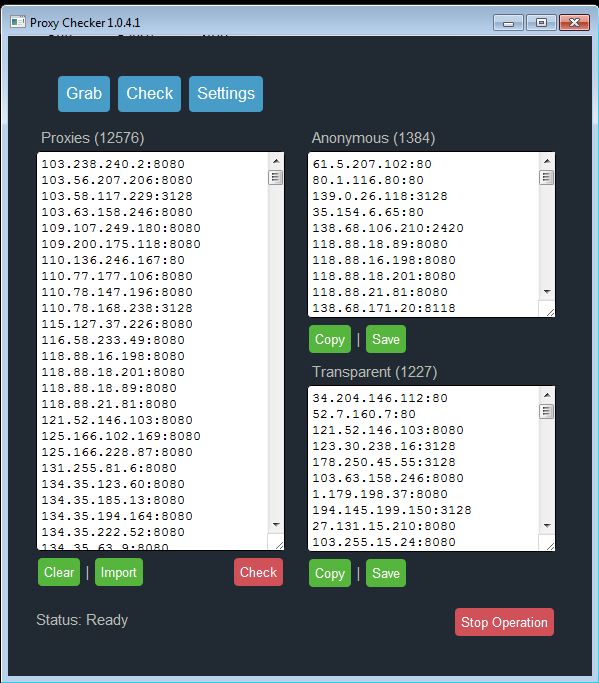

Another method is to use proxy checker tools that not only collect proxies but also verify that they work and how well they perform. This combined approach lets users assemble a reliable proxy list while weeding out dead or slow proxies. The best proxy checkers can quickly test each proxy, check its anonymity level, and measure its response time, so that only the most suitable proxies are used for web scraping tasks.
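The sketch below shows the checking half: it tests many proxies concurrently with a thread pool and keeps those that pass. `check_http_proxy` sends one plain-HTTP request through a proxy using the standard library's `urllib.request.ProxyHandler`; the test URL `http://example.com/`, the timeout, and the worker count are assumptions to adjust. The check function is a parameter, so you can plug in your own test.

```python
import urllib.request
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterable

# Assumption: any stable page that returns HTTP 200 works as a test target.
TEST_URL = "http://example.com/"


def check_http_proxy(proxy: str, timeout: float = 5.0) -> bool:
    """Return True if a plain-HTTP request routed through `proxy` (ip:port) succeeds."""
    handler = urllib.request.ProxyHandler({"http": f"http://{proxy}"})
    opener = urllib.request.build_opener(handler)
    try:
        with opener.open(TEST_URL, timeout=timeout) as response:
            return response.status == 200
    except Exception:  # timeouts, refused connections, malformed replies
        return False


def filter_working(
    proxies: Iterable[str],
    check: Callable[[str], bool] = check_http_proxy,
    max_workers: int = 20,
) -> list[str]:
    """Run `check` on every proxy concurrently; keep passing ones in input order."""
    proxy_list = list(proxies)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        results = list(pool.map(check, proxy_list))
    return [p for p, ok in zip(proxy_list, results) if ok]
```

Threads suit this job because each check spends nearly all its time waiting on the network.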

Finally, combining several sources can help you find better proxies. Users can expand their proxy lists by scraping forums, blogs, and other online platforms where people often share proxies. By cross-referencing these sources with the output of a fast proxy scraper, users can build a robust and varied proxy list ready for a range of uses, from simple web scraping to sophisticated automation.
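Merging several sources mostly comes down to normalizing entries and removing duplicates. The hypothetical `merge_sources` helper below strips whitespace, scheme prefixes such as `http://`, and trailing slashes, then keeps the first occurrence of each proxy, so the order in which you pass sources acts as a priority.

```python
from typing import Iterable, Optional


def normalize(entry: str) -> Optional[str]:
    """Turn 'http://1.2.3.4:80/' or ' 1.2.3.4:80 ' into '1.2.3.4:80'; None if empty."""
    entry = entry.strip()
    if "://" in entry:
        entry = entry.split("://", 1)[1]  # drop the scheme prefix
    entry = entry.rstrip("/")
    return entry or None


def merge_sources(*sources: Iterable[str]) -> list[str]:
    """Combine proxy lists from several sources, dropping duplicates.

    The first occurrence wins, so pass the most trusted source first.
    """
    merged: dict[str, None] = {}
    for source in sources:
        for raw in source:
            proxy = normalize(raw)
            if proxy is not None:
                merged.setdefault(proxy, None)
    return list(merged)
```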

Top Free Proxy Sources

When looking for free proxies, certain websites have earned a reputation for offering dependable proxies that can be used for many purposes. Sites like Free Proxy Lists, Spys.one, and Proxy Scrape offer frequently updated lists of free proxies, including both HTTP and SOCKS types. These directories are essential for users who need a steady stream of fresh proxies for scraping or automation. Still, it is important to assess the reliability and performance of these proxies, through user feedback and your own testing, to make sure they meet your requirements.

Another good source of proxies is community-driven platforms such as Reddit and specialized forums. Users often share their experiences with free proxies, including details about performance, anonymity level, and reliability. Subreddits dedicated to web scraping and data collection are particularly valuable, as they pool community knowledge that can point you to lesser-known proxy sources.

Lastly, GitHub is a valuable resource for public proxy lists and scraping tools. Many developers publish their code and proxy lists there, so anyone can contribute to or use their projects. Projects like ProxList and others provide proxy lists that are updated continuously. Many of these repositories offer not only lists but also utilities that combine scraping and checking, making it easier for users to find fast, reliable proxies for a variety of purposes.

Proxy Verification and Testing

Verifying and testing proxies is a vital step to ensure that they meet your requirements for scraping and automation. A trustworthy proxy must not only be online but also preserve your anonymity and perform well. To start the validation process, use a good proxy checker that can test many proxies at once. With tools like ProxyStorm or other online checkers, users can quickly determine which proxies are working and which ones to discard.
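Before sending a full request through each proxy, a cheap first pass is to see whether anything is listening on the proxy's port at all. This sketch uses the standard library's `socket.create_connection`; a successful connection only proves the port is open, not that a working proxy sits behind it, so survivors still need a real request test.

```python
import socket


def is_port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Cheap first pass: can we open a TCP connection to the proxy at all?"""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, or timed out
        return False
```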

Once you have identified which proxies are active, the next step is speed testing. Proxies vary widely in speed, which directly affects how quickly your scraping jobs run. With a proxy checker, you can measure each proxy's response time and sort the proxies according to your speed requirements. This makes scraping more efficient, since fast proxies help tasks finish on time and improve overall throughput.
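One way to measure speed, sketched under the assumption that you have a `probe` callable that performs a single request through a given proxy and returns `True` on success: time several attempts, take the median of the successful ones to dampen outliers, then drop proxies that failed or exceed your threshold. Both function names are illustrative, not taken from any particular tool.

```python
import statistics
import time
from typing import Callable, Optional


def measure_latency(probe: Callable[[], bool], attempts: int = 3) -> Optional[float]:
    """Median seconds over successful probes; None if every attempt failed."""
    timings: list[float] = []
    for _ in range(attempts):
        start = time.perf_counter()
        ok = probe()
        elapsed = time.perf_counter() - start
        if ok:
            timings.append(elapsed)
    return statistics.median(timings) if timings else None


def rank_by_speed(latencies: dict[str, Optional[float]], max_seconds: float) -> list[str]:
    """Drop failed (None) or too-slow proxies and sort the rest, fastest first."""
    fast = {p: t for p, t in latencies.items() if t is not None and t <= max_seconds}
    return sorted(fast, key=lambda p: fast[p])
```

In practice you would fill the `latencies` dictionary by calling `measure_latency` once per proxy.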

Lastly, testing anonymity is another important part of proxy verification. Proxies offer different levels of privacy: a transparent proxy passes your real IP address on to the target, an anonymous proxy hides your IP but reveals that a proxy is in use, and an elite (high-anonymity) proxy hides both. The protocol (HTTP(S), SOCKS4, or SOCKS5) matters as well, so it is important to understand the differences between these types and to check how well each proxy hides your identity. Proxy testing tools let you assess the anonymity level of every proxy in your list, so that you use only the highest-quality proxies for safe, discreet scraping.
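A common way to test anonymity is to request, through the proxy, a page that echoes back the headers it received (a so-called judge page; httpbin.org/headers is one public example), then inspect what came through. The classifier below is a heuristic sketch: it labels the proxy transparent if your real IP appears in any header, anonymous if headers such as `Via` or `X-Forwarded-For` reveal a proxy hop, and elite otherwise. The header list is an assumption and not exhaustive.

```python
import re
from typing import Mapping

# Headers that commonly reveal a proxy hop (heuristic, not exhaustive).
PROXY_HEADERS = {"via", "x-forwarded-for", "forwarded", "proxy-connection", "x-real-ip"}


def classify_anonymity(echoed_headers: Mapping[str, str], real_ip: str) -> str:
    """Classify a proxy from the headers a header-echo page saw through it."""
    lowered = {name.lower(): value for name, value in echoed_headers.items()}
    # Match the IP as a whole token so 203.0.113.7 does not match 203.0.113.70.
    ip_pattern = re.compile(rf"(?<!\d){re.escape(real_ip)}(?!\d)")
    if any(ip_pattern.search(value) for value in lowered.values()):
        return "transparent"  # your own IP leaked to the target
    if not PROXY_HEADERS.isdisjoint(lowered):
        return "anonymous"  # IP hidden, but the target can tell a proxy is used
    return "elite"  # no obvious trace of you or the proxy
```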

HTTP vs SOCKS Proxies

HTTP proxies are built primarily to handle web traffic and are commonly used for web browsing. They operate at the application layer of the OSI model, which makes them suitable for HTTP and HTTPS requests. This type of proxy can cache content, so requests for frequently accessed resources can be served faster. Their limitation, however, is that they cannot handle non-HTTP protocols, which restricts their flexibility in some applications.
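To see why an HTTP proxy works at the application layer, look at what a client actually sends it. For plain HTTP, the request line carries the full absolute URL, which the proxy reads, forwards, and can cache; for HTTPS, the client sends `CONNECT host:443` and the proxy just tunnels encrypted bytes that it can neither read nor cache. The hypothetical helper below only builds these request heads as strings, following the HTTP/1.1 message format; it does not open any connections.

```python
from urllib.parse import urlsplit


def proxy_request_head(url: str) -> str:
    """Build the request head an HTTP client sends to an HTTP proxy for `url`."""
    parts = urlsplit(url)
    if parts.scheme == "https":
        # HTTPS: ask the proxy to open a raw TCP tunnel to host:port.
        authority = f"{parts.hostname}:{parts.port or 443}"
        return f"CONNECT {authority} HTTP/1.1\r\nHost: {authority}\r\n\r\n"
    # Plain HTTP: absolute-form request line, so the proxy knows where to forward it.
    return f"GET {url} HTTP/1.1\r\nHost: {parts.netloc}\r\n\r\n"
```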

SOCKS proxies, by contrast, operate at a lower level of the network stack, which lets them handle a broader range of protocols, including HTTP, FTP, and even email traffic. This makes SOCKS proxies more versatile for applications beyond basic web browsing. There are two main versions, SOCKS4 and SOCKS5; the latter adds features such as UDP support and improved authentication methods, making it the preferred choice for users who need stronger access control and more flexibility.
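For comparison, here is the start of a SOCKS5 conversation as defined in RFC 1928, built as raw bytes. The client first offers its authentication methods, then asks the proxy to CONNECT to a host and port; once the proxy accepts, it simply relays bytes in both directions, which is why SOCKS can carry protocols other than HTTP. This sketch covers only the domain-name form of the CONNECT request and does not parse the proxy's replies or implement the UDP ASSOCIATE command.

```python
import struct


def socks5_greeting(with_password: bool = False) -> bytes:
    """Client greeting: protocol version 5 plus the auth methods we offer."""
    methods = [0x00]  # 0x00 = no authentication
    if with_password:
        methods.append(0x02)  # 0x02 = username/password (RFC 1929)
    return bytes([0x05, len(methods), *methods])


def socks5_connect(host: str, port: int) -> bytes:
    """CONNECT request for a domain-name target (address type 0x03)."""
    name = host.encode("idna")
    if len(name) > 255:
        raise ValueError("domain name too long for SOCKS5")
    # VER=5, CMD=1 (CONNECT), RSV=0, ATYP=3 (domain), length-prefixed name, port
    return bytes([0x05, 0x01, 0x00, 0x03, len(name)]) + name + struct.pack("!H", port)
```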

When choosing between HTTP and SOCKS proxies, consider your exact requirements. If web scraping or browsing is your main concern, HTTP proxies can be an efficient choice. However, for applications that need support for multiple protocols or extra features such as authentication, SOCKS5 may be the better option. Understanding these differences will help you choose the right proxy type for your web scraping or automation tasks.

Automating with Proxies

Proxies play a key role in automating tasks that involve web scraping or data extraction. With proxies, users can get around geo-restrictions, spread requests across IP addresses on rate-limited websites, and avoid IP bans. Tools such as HTTP proxy scrapers and SOCKS5 proxy checkers help automate the gathering and validation of proxies, so automated jobs run without interruption. This is particularly useful for companies and developers who rely on scraping for research or data analysis.
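Automation usually pairs rotation with failover: if a request through one proxy fails, retry through the next one and drop the bad proxy from the pool. In this sketch `fetch(url, proxy)` stands in for whatever HTTP call you use (a hypothetical parameter, expected to raise on failure), and failed proxies are removed from the caller's list in place so later calls skip them.

```python
from typing import Callable


def fetch_with_failover(
    url: str,
    proxies: list[str],
    fetch: Callable[[str, str], bytes],
    max_attempts: int = 3,
) -> tuple[str, bytes]:
    """Try `url` through successive proxies; return (proxy_used, body)."""
    errors: list[str] = []
    for _ in range(min(max_attempts, len(proxies))):
        proxy = proxies[0]
        try:
            return proxy, fetch(url, proxy)
        except Exception as exc:  # any failure counts against this proxy
            errors.append(f"{proxy}: {exc!r}")
            proxies.pop(0)  # remove it from the caller's pool in place
    raise RuntimeError("all attempts failed: " + "; ".join(errors))
```

A proxy that succeeds stays at the front of the list, so you can combine this with a rotator if you want requests spread across addresses as well.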

To use proxies effectively for automation, it is essential to choose high-quality proxies. Free proxies may be tempting, but they often come with drawbacks such as slow speeds and poor reliability. For businesses focused on efficiency, investing in a fast proxy scraper or a comprehensive proxy verification tool can improve performance. This ensures that automated tasks run quickly, maximizing productivity without the frustration of dealing with dead or unreliable proxies.

When integrating proxies into automation workflows, testing proxy speed and anonymity becomes crucial. A reliable proxy checker shows how well a proxy performs under different conditions, letting users choose the most suitable proxies for their needs. This can greatly improve the efficiency of SEO tools that rely on proxies, helping automated workflows deliver the intended results while maintaining privacy and security.
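Once you have both measurements, choosing proxies is a matter of applying the two thresholds together. The sketch below assumes each proxy has already been tested and labeled with a latency in seconds and an anonymity level (`transparent`, `anonymous`, or `elite`, a common but informal classification); `ProxyResult` and `select_proxies` are illustrative names.

```python
from dataclasses import dataclass
from typing import Iterable

# Ordered from least to most private.
LEVELS = ["transparent", "anonymous", "elite"]


@dataclass
class ProxyResult:
    proxy: str
    latency: float  # seconds, from an earlier speed test
    anonymity: str  # one of LEVELS


def select_proxies(
    results: Iterable[ProxyResult], max_latency: float, min_level: str = "anonymous"
) -> list[str]:
    """Keep proxies that are fast enough AND at least `min_level` private, fastest first."""
    floor = LEVELS.index(min_level)
    chosen = [
        r for r in results
        if r.latency <= max_latency and LEVELS.index(r.anonymity) >= floor
    ]
    return [r.proxy for r in sorted(chosen, key=lambda r: r.latency)]
```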

Conclusion and Best Practices

In conclusion, scraping proxies efficiently for free can significantly enhance your data extraction and automation efforts. With the right proxy scrapers and checkers, you can build a reliable proxy list that meets your needs for performance and privacy. Identify your specific use case, whether that is gathering data for SEO or running large-scale web crawls, so that you can select the most suitable tools.

Best practices include updating your proxy list frequently to maintain high availability and speed, using a mix of HTTP and SOCKS proxies according to your project requirements, and verifying the anonymity of your proxies. It also pays to learn how to configure your proxy settings properly and to understand the differences between public and private proxies, which can lead to better performance and a lower risk of IP blocking.
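To keep a list fresh, it helps to track when each proxy last passed a check and to evict proxies that keep failing. The hypothetical `ProxyPool` class below does that bookkeeping; the 30-minute re-check interval and the three-strike eviction rule are assumed defaults, not recommendations from any particular tool.

```python
import time
from typing import Optional


class ProxyPool:
    """Track when each proxy last passed a check and how often it has failed."""

    def __init__(self, max_age: float = 1800.0, max_failures: int = 3) -> None:
        self.max_age = max_age  # seconds before a re-check is due (assumed value)
        self.max_failures = max_failures  # consecutive failures before eviction
        self.last_ok: dict[str, float] = {}
        self.failures: dict[str, int] = {}

    def record(self, proxy: str, ok: bool, now: Optional[float] = None) -> None:
        """Store the outcome of one check; evict after too many failures in a row."""
        now = time.time() if now is None else now
        if ok:
            self.last_ok[proxy] = now
            self.failures[proxy] = 0
            return
        self.failures[proxy] = self.failures.get(proxy, 0) + 1
        if self.failures[proxy] >= self.max_failures:
            self.last_ok.pop(proxy, None)  # drop it from the usable set
            self.failures.pop(proxy, None)

    def due_for_recheck(self, now: Optional[float] = None) -> list[str]:
        """Proxies whose last passing check is older than `max_age` seconds."""
        now = time.time() if now is None else now
        return sorted(p for p, ts in self.last_ok.items() if now - ts > self.max_age)
```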

Finally, always prioritize quality over quantity when it comes to proxies. Using providers known for high-quality proxies will yield better results than scraping random lists online. By following these guidelines, you will not only make your web scraping more effective but also keep your automation running smoothly and without interruption.